Day 9: Advanced Docker

Docker Volume, Docker Compose, Docker Swarm, Docker Stack

Docker Volume

🧊 Generally, the container contains an application and all of its dependencies, such as libraries, binaries, and configuration files, packaged together in a single executable package. This allows for the application to be run consistently across different environments and platforms.

🧊 By default, a container's data lives only inside the container, so when we remove the container, all of its data is gone. A volume stores that data persistently on the host, outside the container's lifecycle, so the data survives even after the container is removed. It is also how we share data between our system and the container.

🧊 A volume acts as a bridge between our system and the container. Data written to the mounted directory in the container is written to the volume, and data added to the volume is visible inside the container.

Creating Docker Volume

🧊 To create a new Docker volume, you can use the docker volume create command. For example, to create a volume named "myvolume", you would run:

# myvolume is the volume name

docker volume create myvolume

🧊 Once the volume is created, you can use the docker volume ls command to see a list of all volumes on your system.

🧊 To mount a volume to a container, you can use the -v or --mount option when running the docker run command.

For example, to run a container named "mycontainer" and mount the "myvolume" volume to the "/data" directory within the container, you would run:

#jenkins is the name of the image

docker run -it --name mycontainer -v myvolume:/data jenkins

You can also use the following command to inspect the volume:

docker volume inspect myvolume

When you no longer need the volume, you can remove it with the command below (a volume that is still used by a container cannot be removed until that container is removed):

docker volume rm myvolume
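The persistence described in this section can be checked with a short experiment. This is a minimal sketch, assuming Docker is running locally and the small alpine image can be pulled; the volume name demo-volume and the file hello.txt are made up for illustration. Prefix the docker commands with sudo if your user is not in the docker group.

```shell
# Hypothetical names used only for this demo.
VOLUME_NAME="demo-volume"
MOUNT_PATH="/data"

if docker info >/dev/null 2>&1; then
  docker volume create "$VOLUME_NAME"

  # First container writes a file into the volume and is then removed (--rm).
  docker run --rm -v "$VOLUME_NAME:$MOUNT_PATH" alpine \
    sh -c "echo 'hello from the first container' > $MOUNT_PATH/hello.txt"

  # A brand-new container mounts the same volume (with --mount this time)
  # and can still read the file written by the first one.
  docker run --rm --mount "type=volume,source=$VOLUME_NAME,target=$MOUNT_PATH" alpine \
    cat "$MOUNT_PATH/hello.txt"

  # Clean up the demo volume.
  docker volume rm "$VOLUME_NAME"
else
  echo "Docker is not available; skipping the volume demo."
fi
```

The second docker run uses --mount only to show that it is equivalent to -v for a named volume; either form works in both places.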

Docker Compose

Docker Compose is a tool that is used to define and run multi-container Docker applications. It allows you to use a YAML file to configure the services, networks, and volumes that make up your application, and then start and stop the application using a single command.

With Compose, we can start containers without typing a docker run command for each one.

Install docker-compose if it is not already available. It is not installed by default.

sudo curl -L "https://github.com/docker/compose/releases/download/1.29.2/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

sudo chmod +x /usr/local/bin/docker-compose

or install it from the package manager:

sudo apt-get install docker-compose

Then verify the installation:

docker-compose --version

Create a YAML file named docker-compose.yml that describes the services.
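The post does not show the contents of this file, so here is a minimal sketch of what it could look like, assuming a single nginx service published on port 80 (the example this section refers to). The service name web is a placeholder chosen for this sketch.

```shell
# Write a minimal docker-compose.yml into the current directory.
# The service name "web" is a placeholder, not taken from the original post.
cat > docker-compose.yml <<'EOF'
version: "3.7"
services:
  web:
    image: nginx:latest
    ports:
      - "80:80"   # host port 80 -> container port 80
EOF

# Show the file that was written.
cat docker-compose.yml
```

More services can be added under the services key in the same way, each with its own image and ports.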

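Start the application with

sudo docker-compose up

This starts a container for every service defined in the file, pulling the images first if needed.

You can reach each app on the port mapped for it in the file.

For example, if the file uses the nginx image with port 80 published, open port 80 of the server in a browser to see it.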

To stop the services use

sudo docker-compose down

Docker Swarm

Docker Swarm is a tool for container orchestration.

A swarm is a group of machines that are running Docker and joined into a cluster.

The Docker machines are connected to each other and can be managed from a single machine.

🧊 Let's say you have 100 containers. You need to:

🧊 Run health checks on every container.

🧊 Ensure all the containers are up on every system.

🧊 Scale containers up or down depending on the load.

🧊 Roll out updates/changes to all of these containers.

Doing all of this by hand does not scale, which is why we use an orchestration tool.

Orchestration tools available: Docker Swarm, Kubernetes, Apache Mesos.

Important commands used in docker swarm:

👉 sudo docker node ls lists all of the nodes in a Docker Swarm. It displays information about each node, including its ID, hostname, status, availability, and manager status (e.g. whether it is the leader).

It is important to note that the docker node ls command can only be run on a manager node in the swarm, as only manager nodes have access to the swarm's Raft database where all the swarm information is stored.

👉 To get the token that workers need to join the swarm, run this on the master:

sudo docker swarm join-token worker

👉 To know whether an instance is a master or not, run sudo docker info. In the Swarm section of the output you will see Is Manager: true if the instance is a manager.

Swarm in Practice

Service: A group of tasks running the same image, spread across the nodes of the swarm.

Task: A single instance of a container running on a node.

Worker node: A machine that is part of the swarm and runs tasks created by the swarm manager.

Master (manager) node: The node that manages the swarm and is responsible for maintaining the desired state of the services running on it.

🧊 I created 3 instances: 1 master and 2 workers (all the instances act as nodes).

Open a terminal on each instance by connecting through SSH.

🧊 In order to use Docker Swarm, you will need to install Docker on both the master and worker instances.

This will allow the instances to communicate and work together as part of the swarm.

Run the following on all the instances:

#update the system

sudo apt-get update

#install docker

sudo apt-get install docker.io

🧊 Once Docker is installed on all instances, you can use the docker swarm init command on the master node to initialize the swarm.

The instance on which docker swarm init is run becomes the master/leader node.

Master node:

At this point the swarm has only one node, the master itself. As mentioned above, you can run sudo docker node ls on the master to list the nodes in the swarm.

Here we have only one node. Let's add the worker nodes to the swarm.

🧊 Now we should add all the workers to the swarm.

As mentioned above, to get the join token, run this on the master:

sudo docker swarm join-token worker

This prints a complete docker swarm join command. Run that command on each worker node to add it to the swarm.

👉 Before that, allow inbound TCP port 2377 in the security group of the master instance, since the workers connect to the master on this port (if all instances share one security group, add the rule there).
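The join step can also be scripted. The sketch below is only an illustration: the IP address 10.0.0.10 and the helper name swarm_join_command are made up, and the docker calls only run on a node that is already a swarm manager. The -q flag of docker swarm join-token prints just the token instead of the full command. Prefix the docker commands with sudo if your user is not in the docker group.

```shell
# Placeholder address of the master instance (an assumption for this sketch).
MANAGER_IP="10.0.0.10"

# Hypothetical helper: print the command a worker has to run,
# given a join token and the master's address.
swarm_join_command() {
  printf 'sudo docker swarm join --token %s %s:2377\n' "$1" "$2"
}

# Only ask Docker for the token when this node is a swarm manager.
if [ "$(docker info --format '{{.Swarm.ControlAvailable}}' 2>/dev/null)" = "true" ]; then
  TOKEN="$(docker swarm join-token -q worker)"
  swarm_join_command "$TOKEN" "$MANAGER_IP"
else
  echo "This node is not a swarm manager; run 'docker swarm init' first."
fi
```

The printed line is what you would paste into each worker's terminal.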

Worker nodes:

Check on the master that the worker nodes were added, as below.

On the master:

Now the worker nodes have successfully become part of the Docker Swarm. 👍😀

🧊 Now let's run a task in our swarm.

The manager node creates the service.

The worker nodes run the tasks.

We will use a Django todo application. We will run this application in Docker Swarm and see whether it runs on all the workers.

For the Django application, use an image from Docker Hub.

I have one image in my Docker Hub account.

Here aasifa/django-todo-app-img:latest is the image name.

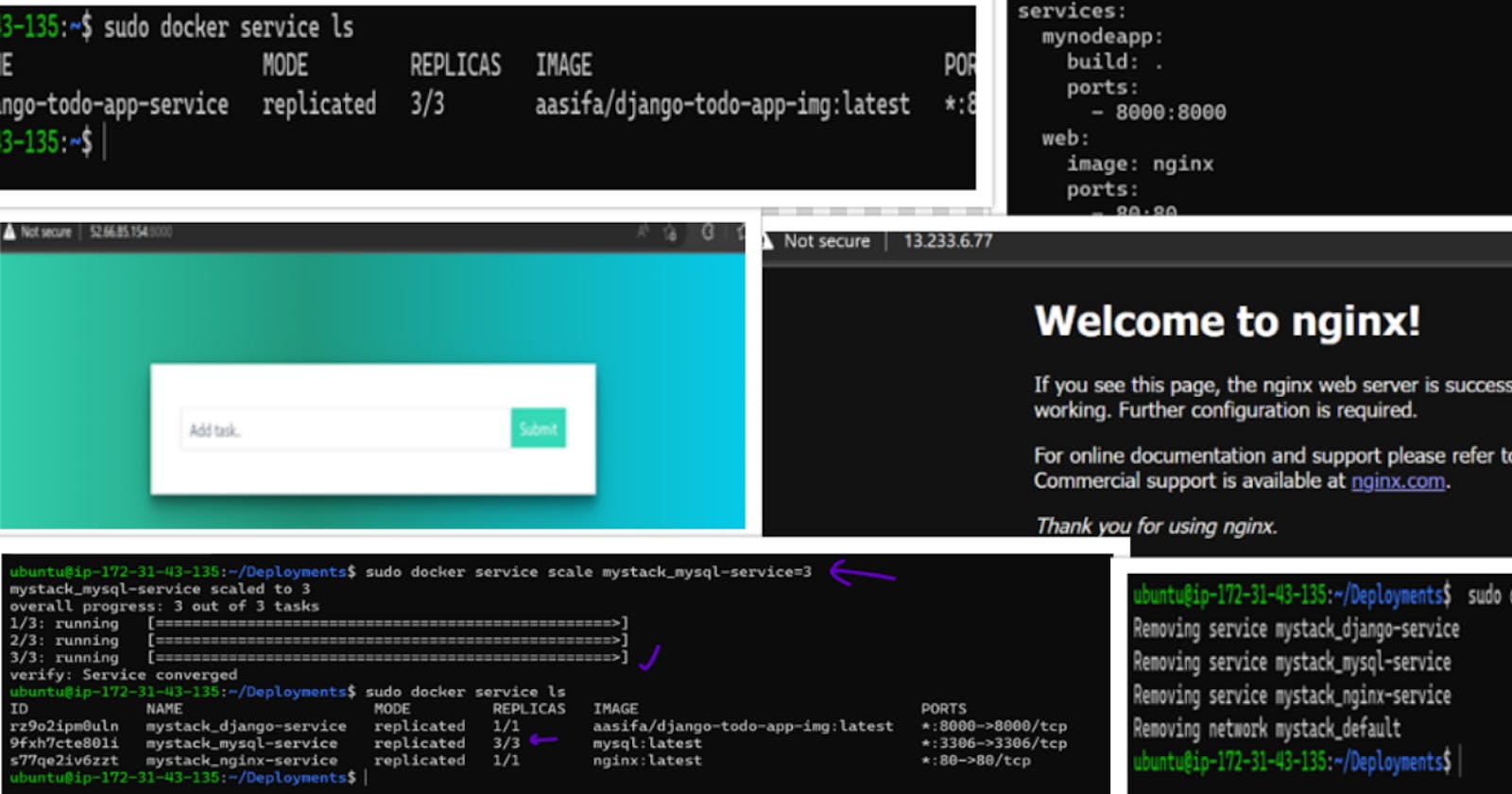

sudo docker service create --name django-todo-app-service --replicas 3 --publish 8000:8000 aasifa/django-todo-app-img:latest

The service has now converged, which means the actual state matches the desired state: the master has scheduled all 3 replicas across the nodes and they are running.

To check whether the services are running use

sudo docker service ls

Now the container will run on 3 machines 😀

To see the container on a given node, run this on that node:

sudo docker ps

🧊 Let's see if the app is deployed on all of these machines.

Open the IP address of each node followed by :8000 in a browser.

Our image is running on all the nodes 😁

🧊 To leave the swarm, run this on the node that should leave (a manager needs the --force flag):

sudo docker swarm leave

Additional points to know about swarm

🧊 If we kill a container on either the master node or a worker node, the app keeps running, because the swarm starts a replacement task. It only stops when you remove the entire service on the master.

🧊 If a worker leaves the swarm, the containers that were running on that worker are stopped, and the master reschedules them on the remaining nodes. This also means a worker can resign on its own.

🧊 You can run multiple images and containers in the swarm (backend, frontend and database).

🧊 Service -> changes to it impact the nodes

🧊 Task -> won't impact the nodes unless a worker leaves the swarm

About Replicas

In Docker Swarm, replicas are used to keep a specified number of tasks for a service running. When you create or update a service, you can specify the desired number of replicas for that service. The swarm manager will then work to keep that number of tasks running on the nodes of the swarm. If a task stops running for any reason, the swarm manager will automatically schedule a new task on an available node to maintain the desired number of replicas.

The replica count can be set while creating a service using docker service create command, or you can use docker service update command to change the replica count of a service that is already running.

For example, to create a service named "web" with 5 replicas, you can use the following command:

docker service create --replicas 5 --name web nginx

To scale the replicas of a service, for example, to increase the replica count of the service "web" from 5 to 10 replicas, you can use the following command:

docker service update --replicas 10 web

It's worth noting that the replica count is just a desired state. The actual number of running tasks could be lower if the nodes don't have enough resources or if there are some failures.

Also, it's important to keep in mind that the replicas are spread across the nodes, so that if one node goes down, the replicas running on that node will be rescheduled on other nodes, bringing the service back to the desired number of replicas.
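To see how the replicas of a service are actually spread, you can count its tasks per node. This is a minimal sketch, assuming it is run on a manager node and that the service named web from the example above exists; the helper name count_per_node is made up. Prefix the docker commands with sudo if your user is not in the docker group.

```shell
SERVICE_NAME="web"   # service from the example above

# Hypothetical helper: count how many times each line (node name) appears on stdin.
count_per_node() {
  sort | uniq -c
}

# docker service commands only work on a swarm manager.
if [ "$(docker info --format '{{.Swarm.ControlAvailable}}' 2>/dev/null)" = "true" ]; then
  # Print the node of every task that is meant to be running, then count per node.
  docker service ps "$SERVICE_NAME" \
    --filter desired-state=running \
    --format '{{.Node}}' | count_per_node
else
  echo "Not a swarm manager; skipping."
fi
```

Each output line is a count followed by a node hostname, so an uneven spread or a missing node is easy to spot.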

Docker Stack

So far we have deployed a single image, aasifa/django-todo-app-img:latest.

But what if we want to deploy a group of services, each with its own image? We can use Docker Stack for that. How 🤔?

Let's implement it. 😉

We will use a YAML file to define multiple services and their images.

YAML file

A YAML (YAML Ain't Markup Language) file is a file format that is commonly used to define configuration files, such as for services in a Docker Swarm.

Here is an example of a simple YAML file that can be used to create a service in a Docker Swarm:

    version: "3.7"
    services:
      web:
        image: nginx:latest
        ports:
          - "80:80"
        deploy:
          replicas: 5
          resources:
            limits:
              cpus: "0.1"
              memory: 50M
          update_config:
            parallelism: 2
            delay: 10s

Now we will create our own YAML file.

I have created a simple YAML file, stack-cluster.yaml, which includes our todo app image plus the nginx and MySQL images from Docker Hub.
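The actual file is not reproduced in this post, so the following is only a sketch of what such a stack file could look like. The image aasifa/django-todo-app-img:latest, the service name mysql-service, and the ports 8000, 80 and 3306 come from this post; the other service names, the mysql:8.0 tag, and the password value are assumptions for illustration.

```shell
# Write a sketch of stack-cluster.yaml into the current directory.
# Names and values marked below are assumptions, not the author's real file.
cat > stack-cluster.yaml <<'EOF'
version: "3.7"
services:
  django-service:            # hypothetical service name
    image: aasifa/django-todo-app-img:latest
    ports:
      - "8000:8000"
  nginx-service:             # hypothetical service name
    image: nginx:latest
    ports:
      - "80:80"
  mysql-service:
    image: mysql:8.0         # assumed tag
    ports:
      - "3306:3306"
    environment:
      MYSQL_ROOT_PASSWORD: example   # placeholder value, change it
EOF

# Show the file that was written.
cat stack-cluster.yaml
```

When a stack is deployed, each service name is prefixed with the stack name, which is why a service called mysql-service in a stack called mystack shows up as mystack_mysql-service.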

💡 You can see that we are arranging one service on top of another. This is nothing but a stack.

🧊 A Docker stack is a group of services that are deployed together as a single unit in a Docker Swarm. We need to deploy this Docker stack from the master:

sudo docker stack deploy -c stack-cluster.yaml mystack

🧊 The output lists the services being created, but where the containers actually run depends on the working status/health of the nodes.

To check the running services:

sudo docker service ls

Example

The Django container is running on worker 2 on port 8000

The nginx container is running on worker 1 on port 80

MySQL is running on the other worker on port 3306

🧊 Now let's say we want to scale up the replicas for a particular service.

The master spreads the tasks across the available nodes. If one of the tasks fails or is stopped, the master automatically schedules a new task on an available node to maintain the desired number of replicas.

sudo docker service scale servicename=2

One is running on the master

One is on worker 2

One is on worker 3

🧊 To view the logs of a service (mystack_mysql-service is the service name):

sudo docker service logs mystack_mysql-service

To remove the stack (mystack is the stack name):

sudo docker stack rm mystack

That's all about stack 😇

---Thank you for your valuable time. See you in my next blog🙂---